Web Scraping vs. Web Crawling: Understand the Difference

Anda Miuțescu on Jul 01 2021

What came first? The web crawler or the web scraper?

It depends on how you differentiate between extraction and downloading. Web scraping does not always necessitate the use of the Internet. Extracting information from a local system, a database, or using data scraping tools can be referred to as data collection. In the interim, web crawlers are primarily instructed to make a copy of all accessed sites for later processing by search engines, which will index the saved pages and search for the unindexed pages quickly.

This article aims to explain the differences and co-functionalities of web scraping, crawling, and everything in between. As a bonus, we have included an in-depth guide on building your own web crawler, so read on!

What is web scraping?

Web scraping, also known as data extraction, is the automated process of collecting structured information from the Internet. This umbrella term covers an extensive array of techniques and use cases involving the modus operandi of Big Data.

On the most basic level, web scraping refers to copying data from a website. Then, users can import the scraped data into a spreadsheet, a database, or utilize software to perform additional processing.

Who benefits from web scraping? Anyone in need of extensive knowledge regarding one particular subject. If you have ever ventured into any type of research, your first instinct was most likely to manually copy and paste data from sources to your local database.

Today, developers can easily use web scraping techniques thanks to automation tools. What used to take weeks for a team to complete can now be done autonomously in a matter of hours with complete accuracy.

Changing from manual to automated scraping saves time for individuals. It also gives an economic advantage to developers. The data collected by employing web scrapers can be later on exported to CSV, HTML, JSON, or XML format.

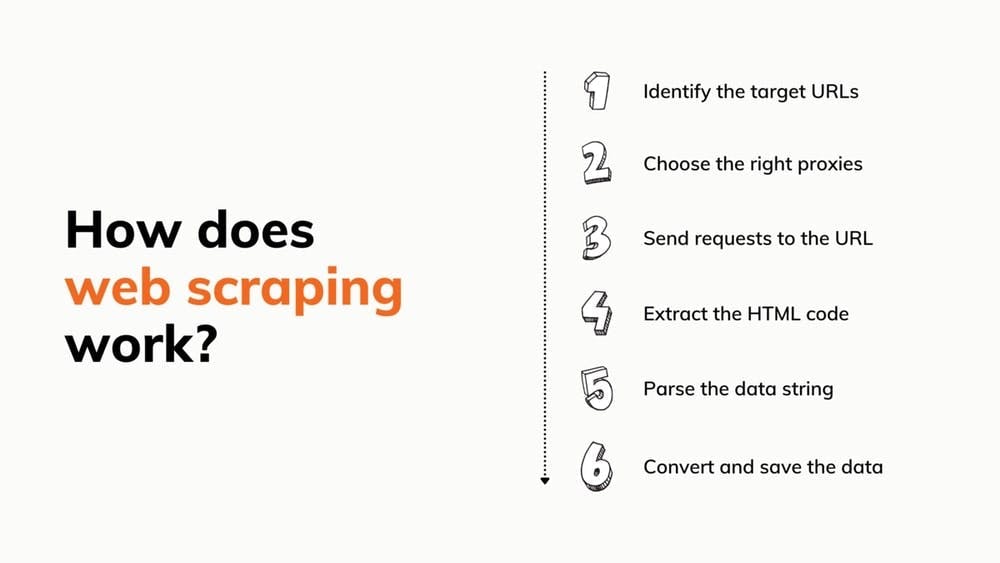

How does web scraping work?

Sounds easy, right? Well, building a scraper from 0 that can do all that is time-consuming. Not to mention that the bot might not always work and that you’ll need to rent proxies. However, if you still want a crack at it, we have some tutorials that will help.

However, one of the most enticing aspects of using a pre-built tool is how simple it is to incorporate it into your project. All that is necessary is a set of credentials and a rudimentary comprehension of the API documentation.

Also, pre-built scrapers may come with many other goodies:

- An incorporated headless browser to execute javascript

- A Captcha solver

- A proxy pool spanning millions of IPs

- A proxy rotator

- A simple interface to customize requests

Our team developed a web scraping API that will save you a lot of time by thoroughly researching the industry, and focusing our efforts on creating the most beneficial solution we could think of.

What is web crawling?

We all know and use Google, Bing, or other search engines. Using them is simple — you ask them for something and they look in every corner of the web to provide you with an answer. But, at the end of the day, Google thrives by the grace of its Googlebot crawler.

Web crawlers are used by search engines to scan the Internet for pages according to the keywords you input and remember them through indexing for later use in search results. Crawlers also aid search engines in gathering website data: URLs, hyperlinks, meta tags, and written content, as well as the inspection of the HTML text.

You don’t have to worry about the bot getting stuck in an endless loop of visiting the same sites because it keeps track of what it already accessed. Their behavior is also determined by a mixture of criteria such as:

- re-visit policy

- selection policy

- deduplication policy

- courtesy policy

Web crawlers face several obstacles, including the vast and ever-changing Public Internet and content selection. Umpteen pieces of information are posted on the daily. As a result, they would have to keep refreshing their indexes and sifting through millions of pages to get accurate results. Nevertheless, they are essential parts of the systems that examine website content.

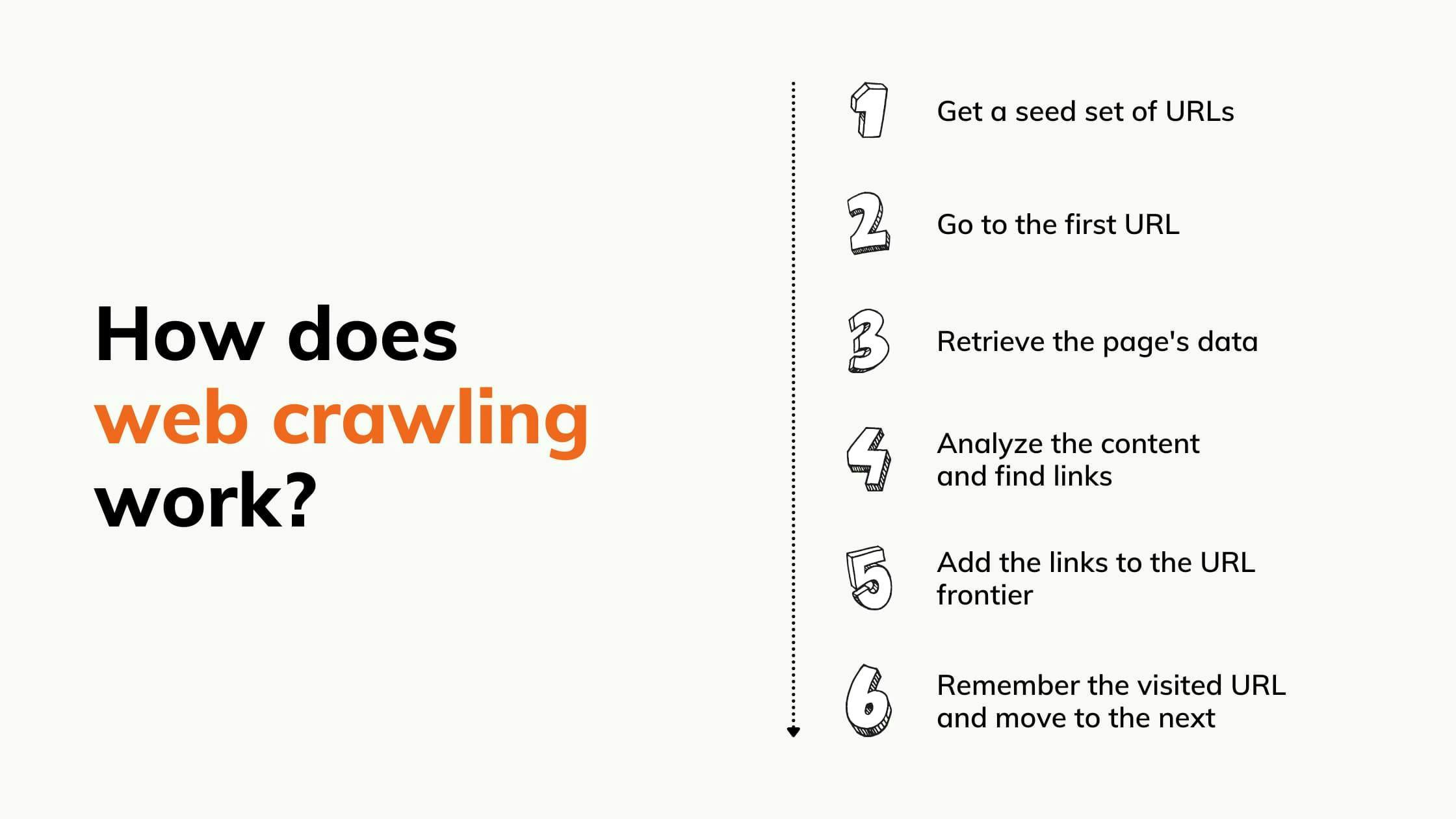

How does web crawling work?

Search engines don't have any way of telling what web pages are out there. Before they can obtain the relevant pages for keywords, the robots must crawl and index them. Here are the 7 comprehensive steps:

Web Scraping vs. Web Crawling

Web scraping is frequently confused with web crawling. Web scraping differs from web crawling in that it extracts and duplicates data from any page it accesses, whereas web crawling navigates and reads pages for indexing. Crawling checks for pages and content, scraping makes sure the data gets to you.

The myth that web scraping and web crawling work simultaneously is a misunderstanding we’re willing to mediate. Web scraping is a technique for extracting data from web pages. Whether they are crawled pages, all pages behind a particular site, or pages in digital archives, whilst web crawling can generate an URL list for the scraper to collect. For instance, when a company wants to gather information from a site, it will crawl the pages and then scrape the ones that hold valuable data.

Combining web crawling and web scraping leads to more automation and less hassle. Through crawling, you may produce a link list and then send it to the scraper so it knows what to extract. The benefit is collecting data from anywhere on the World Wide Web without any human labor.

Use cases

Web scraping and web crawling make an extremely good combination to quickly collect and process data that a human wouldn't be able to analyze in the same timeframe. Here are some examples of how this tandem can help in business:

Brand protection. You can use the tools to quickly find harmful online content to your brand (like patent theft, trademark infringement, or counterfeiting) and list it out so you can take legal action against the responsible parties.

Brand monitoring. Brand monitoring is a lot simpler when using a web crawler. The crawler can discover mentions of your company in the online environment and categorize them so that they're easier to understand, such as news articles or social media posts. Add web scraping to complete the process and get your hands on the information.

Price control. Companies use scraping to extract product data, analyze how it affects their sales model, as well as develop the best marketing and sales strategy. Crawlers, on the other hand, will look for new product pages that hold valuable info.

Email marketing. Web scraping can gather websites, forums, and comment sections at breakneck rates and extract all of the email addresses you need for your next campaign. Email crawling can even look through forums and chat groups, checking for emails that are hidden but can be found in the headers.

Natural language processing. In this case, the bots are used for linguistic research where machines assist in the interpretation of natural languages used by humans.

Crawlers and scrapers are used to provide huge volumes of linguistic data to these machines for them to gain experience. The more data sent to the machine, the faster it will achieve its ideal understanding level. Forums, marketplaces, and blogs that feature various types of reviews are the most frequent sites to get this type of information.

The Internet will be able to train it to eventually pick up and recognize slang, which is crucial in today's marketing and seeks to cater to a variety of backgrounds.

Real Estate Asset Management: In real estate, web crawlers and scrapers are often used for their ability to analyze market data and trends. Both provide detailed info on properties or specific groups of buildings, regardless of asset class (office, industrial, or retail), which helps leasing businesses get a competitive edge. To put things into perspective, the bots create insights that lead to better market forecasts and superior property management practices.

Lead Generation. Advertisements and special offers are useless if they don’t reach the right people. Companies use crawlers and scrapers to find those people, whether it’s on social media or business registries. The bots can quickly find and gather contact info that will then be sent to the sales or marketing team.

How to build a web crawler

Now that you know how the wheel goes ‘round, you’re probably wondering how to actually crawl websites. Building your own crawler saves money and is easier than you may think. On the basis thereof, we have prepared a detailed guide on the in’s and out’s, the how to’s, and everything in between.

Prerequisites

- Python3.

- Python IDE. Recommendation/In this guide, we will use Visual Studio Code, but any other IDE will do the trick.

- Selenium: to scrape the HTML of dynamic websites.

- Beautifulsoup: to parse the HTML document.

- ChromeDriver: a web driver to configure selenium. Download the right version and remember the path where you stored it!

Setup the environment

First, let’s install the libraries we need. Open a terminal in your IDE of choice and run the following commands:

> pip install selenium

> pip install beautifulsoup4

Now let’s import the libraries we installed into our Python code. We also define the URL that we’re going to crawl and add the configuration for selenium. Just create a crawler.py file and add the following:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from bs4 import BeautifulSoup

CHROMEDRIVER_PATH = "your/path/here/chromedriver_win32/chromedriver"

BASE_URL = "https://ecoroots.us"

SECTION = "/collections/home-kitchen"

FULL_START_URL = BASE_URL + SECTION

options = Options()

options.headless = True

driver = webdriver.Chrome(CHROMEDRIVER_PATH, options=options)

Pick a website and inspect the HTML

We chose an e-Commerce website selling zero-waste products, and we will access the page of each product and extract its HTML. Therefore, we will look for all the internal links on the store’s website and access them recursively. But first, let’s take a look at the page structure and make sure we are not coming across any crawlability issues. Right-click anywhere on the page, then on Inspect element, and voila! The HTML is ours.

Building the crawler

Now we can begin writing the code. To build our crawler, we’ll follow a recursive flow so we’ll access all the links we encounter. But first, let’s define our entry point:

def crawl(url, filename):

page_body = get_page_source(url, filename)

soup = BeautifulSoup(page_body, 'html.parser')

start_crawling(soup)

crawl(FULL_START_URL, 'ecoroots.txt')

We implement the crawl function, which will extract the HTML documents through our get_page_source procedure. Then it will build the BeautifulSoup object that will make our parsing easier and call the start_crawling function, which will start navigating the website.

def get_page_source(url, filename):

driver.get(url)

soup = BeautifulSoup(driver.page_source, 'html.parser')

body = soup.find('body')

file_source = open(filename, mode='w', encoding='utf-8')

file_source.write(str(body))

file_source.close()

return str(body)

As stated earlier, the get_page_source function will use selenium to get the HTML content of the website and will write in a text file in the <body> section, as it’s the one containing all the internal links we are interested in.

unique_links = {}

def start_crawling(soup):

links = soup.find_all(lambda tag: is_internal_link(tag))

for link in links:

link_href = link.get('href')

if not link_href in unique_links.keys() or unique_links[link_href] == 0:

unique_links[link_href] = 0

link_url = BASE_URL + link_href

link_filename = link_href.replace(SECTION + '/products/', '') + '.txt'

crawl(link_url, link_filename)

unique_links[link_href] = 1This is the main logic of the crawler. Once it receives the BeautifulSoup object, it will extract all the internal links. We do that using a lambda function, with a few conditions that we defined in the is_internal_link function:

def is_internal_link(tag):

if not tag.name == 'a': return False

if tag.get('href') is None: return False

if not tag.get('href').startswith(SECTION + '/products'): return False

return True

This means that for every HTML element that we encounter, we first verify if it’s a <a> tag, if it has an href attribute, and then if the href attribute’s value has an internal link.

After we get the rundown of the links, we iterate each one of them, build the complete URL and extract the product’s name. With this new data, we have a new website that we pass to the crawl function from our entry point, so the process begins all over again.

But what if we encounter a link that we already visited? How do we avoid an endless cycle? Well, for this situation, we have the unique_links data structure. For every link we iterate, we verify if it was accessed before starting to crawl it. If it’s a new one, then we simply mark it as visited once the crawling it’s done.

Once you run your script, the crawler will start navigating through the website’s products. It may take a few minutes according to the size of the website you choose. Finally, you should now have a bunch of text files that will hold the HTML of every page that your crawler visits.

Final thoughts

Web crawling and web scraping are heavily intertwined and influence each other's success by contributing to the information that is ultimately processed. Hopefully, this article will help you assess the usage of these sister mechanisms and the environments they can be employed in.

Automation is the future of data collection. For that reason alone, we have come up with a solution that saves you the hassle of writing code, where you receive fast access to web content and avoid IP blocks. Before getting your budget in order, why not check out our free trial package with residential and mobile proxies included from the get-go? Scrape on.

News and updates

Stay up-to-date with the latest web scraping guides and news by subscribing to our newsletter.

We care about the protection of your data. Read our Privacy Policy.

Related articles

Discover how to efficiently extract and organize data for web scraping and data analysis through data parsing, HTML parsing libraries, and schema.org meta data.

Automate web scraping and file downloads with Python and wget. Learn how to use these tools to gather data and save time.

This tutorial will demonstrate how to crawl the web using Python. Web crawling is a powerful approach for collecting data from the web by locating all of the URLs for one or more domains.