How to Use WebScrapingAPI To Scrape Any Website

Robert Munceanu on Apr 07 2021

If you are interested in web scrapers and want a solution that can extract various data from the Internet, you’ve come to the right place!

In this article, we will show you how easy it is to make use of WebScrapingAPI to obtain the information you need in just a few moments and manipulate the data however you like.

It is possible to create your own scraper to extract data from the web, but developing it takes a lot of time and effort, as there are some challenges you need to overcome along the way. And time is of the essence.

Before we see how you can extract data from any website using WebScrapingAPI, let’s go over why web scrapers are so valuable and how they can help you or your business achieve your growth goals.

How web scraping can help you

Web scraping can be useful for various purposes. Companies use data extraction tools in order to grow their businesses. Researchers can use the data to create statistics or help with their thesis. Let’s see how:

- Pricing Optimization: Having a better view of your competition can help your business grow. This way you know how prices in the industry fluctuate and how that can influence your business. Even if you are just searching for an item to purchase, this can help you compare prices from different suppliers and find the best deal.

- Research: This is an efficient way to gather information for your research project. Statistics and data reports lend credibility to your work, and using a web scraping tool speeds up the process of collecting them.

- Machine Learning: In order to train your AI, you need a large amount of data to work with, and extracting it by hand can take a lot of time. For example, if you want your AI to detect dogs in photos, you will need a lot of puppies.

The list goes on, but what you must remember is that web scraping is a very important tool with many uses, just like a Swiss Army knife! If you are curious about when web scraping can be the answer to your problems, why not have a look?

Up next, you will see some features and how WebScrapingAPI can help you scrape the web and extract data like nobody’s watching!

What WebScrapingAPI brings to the table

You probably thought of creating your own web scraping tool rather than using a pre-made one, but there are many things you need to take into account, and these may take a lot of time and effort.

Not all websites want to be scraped, so they develop countermeasures to detect and block the bot from doing your bidding. They may use different methods, such as CAPTCHAs, rate limiting, and browser fingerprinting. If they find your IP address to be a bit suspicious, well, chances are you won’t be scraping for too long.

Some websites serve their content only to visitors from certain regions of the world, so you must use a proxy in order to access it. But managing a proxy pool isn’t an easy task either, as you need to constantly rotate the proxies to remain undetected and use specific IP addresses for geo-restricted content.

WebScrapingAPI takes this weight off your shoulders and handles these issues for you, making scraping a piece of cake. You can have a look and see for yourself what roadblocks may appear when web scraping!

Now that we know how WebScrapingAPI can help us, let’s find out how to use it, and rest assured it’s pretty easy too!

How to use WebScrapingAPI

API Access Key & Authentication

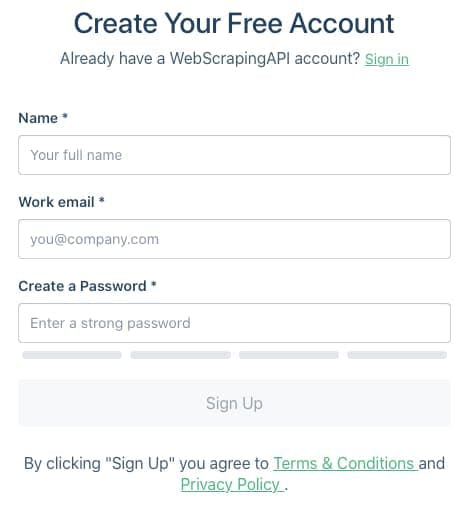

First things first, we need an access key in order to use WebScrapingAPI. To acquire it, you need to create an account. The process is pretty straightforward, and you don’t have to pay anything, as there’s a free subscription plan too!

After logging in, you will be redirected to the dashboard, where you can see your unique Access Key. Make sure you keep it a secret, and if you ever think your unique key has been compromised, you can always use the “Reset API Key” button to get a new one.

Once you have your key, we can move on to the next step and see how to use it.

Documentation

It is essential to know what features WebScrapingAPI offers to help with our web scraping adventure. All this information can be found in the documentation, which presents it in detail with code samples in different programming languages, so you can better understand how things work and how they can be integrated within your project.

The most basic request you can make to the API sets the api_key and url parameters to your access key and the URL of the website you want to scrape, respectively. Here is a quick example in Python:

    import http.client

    conn = http.client.HTTPSConnection("api.webscrapingapi.com")
    conn.request("GET", "/v1?api_key=XXXXX&url=http%3A%2F%2Fhttpbin.org%2Fip")

    res = conn.getresponse()
    data = res.read()

    print(data.decode("utf-8"))
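Notice that the target URL in the request above is percent-encoded (http%3A%2F%2Fhttpbin.org%2Fip stands for http://httpbin.org/ip), so that it can travel safely inside the query string. Here is a minimal sketch of how you could build that path instead of encoding it by hand. The helper name build_request_path is our own and not part of WebScrapingAPI; only the /v1 path and the api_key and url parameters come from the example above.

```python
from urllib.parse import urlencode


def build_request_path(api_key, target_url):
    """Build the path and query string for a basic request.

    build_request_path is a hypothetical helper used for illustration only.
    urlencode percent-encodes the target URL so that characters such as
    ':' and '/' do not clash with the query string of the API request.
    """
    query = urlencode({"api_key": api_key, "url": target_url})
    return "/v1?" + query


print(build_request_path("XXXXX", "http://httpbin.org/ip"))
# prints: /v1?api_key=XXXXX&url=http%3A%2F%2Fhttpbin.org%2Fip
```

The returned string can be passed as the second argument of conn.request in the example above.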

WebScrapingAPI has other features that can be used for scraping. Some of them can be enabled just by setting a few more parameters, while others, such as the ones we talked about earlier, are already built into the API.

Let’s see a few other parameters we can set and why they are useful for our data extraction:

- render_js: Some websites may render essential page elements using JavaScript, meaning that some content won’t be shown on the initial page load and won’t be scraped. Using a headless browser, WSA is able to render this content and scrape it for you to make use of. Just set render_js=1, and you are good to go!

- proxy_type: You can choose what type of proxies to use, which matters because the type of proxy can have an impact on your web scraping.

- country: Geolocation comes in handy when you want to scrape from different locations, as the content of a website may be different, or even exclusive, depending on the region. Here you set the 2-letter country code supported by WSA.
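To see how these optional parameters fit together with the basic request, here is a small Python sketch that assembles the query string. The function build_query and its argument names are our own illustration. The accepted values for proxy_type and the list of supported country codes are not covered in this article, so the sketch passes them through unchanged and only checks that the country code has two letters; please check the documentation for the values WSA actually accepts.

```python
from urllib.parse import urlencode


def build_query(api_key, target_url, render_js=False, proxy_type=None, country=None):
    """Assemble a query string with the optional parameters described above.

    build_query is a hypothetical helper, not part of any official client.
    """
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        # render_js=1 asks for the page to be rendered in a headless browser
        params["render_js"] = "1"
    if proxy_type is not None:
        # Passed through as-is; valid values are an assumption left to the docs
        params["proxy_type"] = proxy_type
    if country is not None:
        if len(country) != 2 or not country.isalpha():
            raise ValueError("country must be a 2-letter country code")
        params["country"] = country
    return urlencode(params)


print(build_query("XXXXX", "https://example.com", render_js=True, country="us"))
# prints: api_key=XXXXX&url=https%3A%2F%2Fexample.com&render_js=1&country=us
```

Leaving a parameter out of the call simply leaves it out of the request, so the basic request from earlier is still the default.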

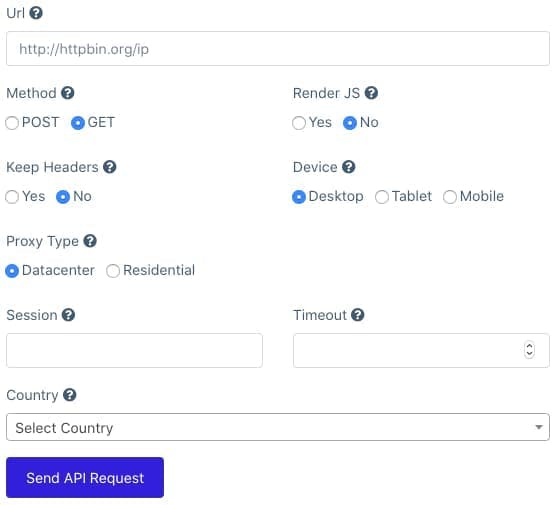

API Playground

If you want to see WebScrapingAPI in action before integrating it within your project, you can use the playground to test some results. It has a friendly interface and is easy to use. Just select the parameters based on the type of scraping you want to do and send the request.

In the result section, you will see the output after the scraping is done and the code sample of said request in different programming languages for easier integration.

API Integration

How can we use WSA within our project? Let’s have a look at this quick example, where we scrape an Amazon search results page to find the most expensive Graphics Card on it. This example is written in JavaScript, but you can do it in any programming language you feel comfortable with.

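First, we need to install some packages to help us with the HTTP request (got) and with parsing the result (jsdom). Because the example loads got with require, we pin got to version 11; newer versions of got are published as ES modules only and cannot be loaded that way. Run this command in the project’s terminal:

    npm install got@11 jsdom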

Our next step is to set the parameters necessary to make our request:

    const params = {
        api_key: "XXXXXX",
        url: "https://www.amazon.com/s?k=graphic+card"
    }

This is how we prepare the request to WebScrapingAPI to scrape the website for us:

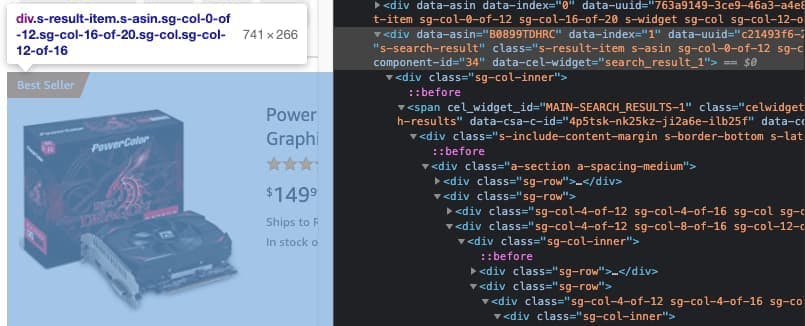

    const response = await got('https://api.webscrapingapi.com/v1', {searchParams: params})

Now we need to see where each Graphics Card element is located inside the HTML. Using the browser’s Developer Tools, we found that each element with the class s-result-item contains all the details about a product, but we only need its price.

Inside the element, we can see there is a price container with the class a-price and, nested inside it, an element with the class a-offscreen, from which we will extract the text representing the price.

WebScrapingAPI will return the page in HTML format, so we need to parse it. JSDOM will do the trick.

    const {document} = new JSDOM(response.body).window

After sending the request and parsing the response received from WSA, we need to filter the result and extract only what is important for us. From the previous step, we know that the details of each product are in an element with the s-result-item class, so we iterate over these elements. Inside each one, we check whether the price container with the class a-price exists, and if it does, we extract the price from the a-offscreen element inside it and push it into an array.
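If you are following along in Python rather than JavaScript, the same filtering step can be sketched with the standard library’s html.parser. The markup below is a made-up fragment that only mimics the class names mentioned above (s-result-item, a-price, a-offscreen); real pages are much larger and their structure can change. Unlike the JavaScript walkthrough, this simplified sketch collects every a-offscreen text found inside an a-price element and assumes reasonably well-formed HTML.

```python
from html.parser import HTMLParser

# Hypothetical sample markup, for illustration only
SAMPLE_HTML = """
<div class="s-result-item">
  <span class="a-price"><span class="a-offscreen">$329.99</span></span>
</div>
<div class="s-result-item">
  <span class="a-text">No price listed</span>
</div>
<div class="s-result-item">
  <span class="a-price"><span class="a-offscreen">$1,299.99</span></span>
</div>
"""

# Elements that never have a closing tag, so they must not go on the stack
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class PriceCollector(HTMLParser):
    """Collects the text of .a-offscreen elements nested inside .a-price."""

    def __init__(self):
        super().__init__()
        self.open_tags = []  # class lists of the currently open elements
        self.prices = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        self.open_tags.append(classes)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.open_tags:
            self.open_tags.pop()

    def handle_data(self, data):
        text = data.strip()
        if not text or not self.open_tags:
            return
        inside_price = any("a-price" in c for c in self.open_tags[:-1])
        if inside_price and "a-offscreen" in self.open_tags[-1]:
            self.prices.append(text)


def extract_prices(html):
    collector = PriceCollector()
    collector.feed(html)
    collector.close()
    return collector.prices


print(extract_prices(SAMPLE_HTML))
# prints: ['$329.99', '$1,299.99']
```

The second product has no price container, so it is skipped, just like in the check described above.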

Finding out which is the most expensive product should be child’s play now. Just iterate through the array, convert each price to a number, and compare the prices with one another.
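One detail is easy to miss when comparing prices: they arrive as text, and a value such as $1,299.99 contains a currency symbol and a thousands separator that must be removed before it can be converted to a number. Here is a short Python sketch of that step. The function names are our own, and the sketch assumes US-style formatting, with a comma for thousands and a dot for decimals.

```python
import re


def parse_price(text):
    """Turn a price string such as "$1,299.99" into a float, or None."""
    # Keep only digits and the decimal point (assumes US-style formatting)
    cleaned = re.sub(r"[^0-9.]", "", text)
    try:
        return float(cleaned)
    except ValueError:
        return None


def most_expensive(price_texts):
    """Return the highest parsable price, or None if there is none."""
    values = [v for v in map(parse_price, price_texts) if v is not None]
    return max(values, default=None)


print(most_expensive(["$329.99", "$1,299.99", "$89.00"]))
# prints: 1299.99
```

Without the cleanup, a naive conversion would stop reading at the comma and treat $1,299.99 as 1, which would make the cheapest-looking card win.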

Wrapping it all up in an async function, the final code should look like this:

    const {JSDOM} = require("jsdom");
    const got = require("got");

    (async () => {
        const params = {
            api_key: "XXX",
            url: "https://www.amazon.com/s?k=graphic+card"
        }

        // Ask WebScrapingAPI to fetch the page for us
        const response = await got('https://api.webscrapingapi.com/v1', {searchParams: params})

        // Parse the returned HTML into a DOM we can query
        const {document} = new JSDOM(response.body).window

        const products = document.querySelectorAll('.s-result-item')
        const prices = []

        products.forEach(el => {
            // Not every result has a price, so check each step before reading it
            const priceContainer = el.querySelector('.a-price')
            const priceElement = priceContainer && priceContainer.querySelector('.a-offscreen')
            if (priceElement) prices.push(priceElement.textContent)
        })

        let most_expensive = 0

        prices.forEach((price) => {
            // Drop the currency symbol and thousands separators, e.g. "$1,299.99"
            const value = parseFloat(price.replace(/[^0-9.]/g, ''))
            if (most_expensive < value) most_expensive = value
        })

        console.log("The most expensive item is: ", most_expensive)
    })();

Final thoughts

We hope this article has shown you how useful a ready-built web scraping tool can be and how easy it is to use it within your project. It takes care of roadblocks set by websites, helps you scrape over the Internet in a stealthy manner, and can also save you a lot of time.

Why not give WebScrapingAPI a try? See for yourself how useful it is if you haven’t already. Creating an account is free and 1000 API calls can help you start your web scraping adventure.

Start right now!
