SERP Scraping API - Start Guide

WebscrapingAPI on Aug 22 2023

Web Scraping API offers a suite of cloud-based scrapers, including:

- The Scraper API - designed for general-purpose web scraping

- The SERP API - designed for real-time scraping of Google and other search engines

- The Amazon API - designed for real-time Amazon scraping

As the title implies, today’s article focuses on the SERP API and how it enables you to gather real-time information from Google and other search engines.

Use Cases For The SERP Scraper API

There are numerous reasons to scrape data from some of the biggest search engines. Take Google, for example: scraping Google search can return valuable information on competitors, your website’s position on Google, and so on. In general, here are a few advantages of using the SERP Scraper API:

- Market Analysis - Web scraping search engine results can provide valuable insights into market trends, customer preferences, and competitor strategies. By analyzing search engine rankings and keyword trends, businesses can identify opportunities, monitor their competitors' activities, and make informed decisions to stay ahead in their industry.

- Search Engine Optimization (SEO) - SEO professionals and website owners can assess their website's visibility and ranking performance for specific keywords. This data allows them to optimize their content, identify areas for improvement, and fine-tune their SEO strategies to increase organic traffic and visibility.

- Topic Research - The SERP Scraper API can be used to gather information on popular topics, frequently asked questions, and user preferences. This data can be leveraged to create relevant and engaging content that resonates with the target audience, thereby increasing the chances of driving more traffic to a website and establishing authority in a particular niche.

Why Sign Up For The SERP Scraping API

Scraping Google in particular (and other search engines in general) is one of the most challenging tasks in web scraping, because search engines detect automated activity and block access for such users. The detection mechanism varies from one search engine to another, but it usually involves checking the browser’s fingerprint, the IP address, and other signals (such as mouse movement on the page).

With that in mind, you can imagine how hard it is to set up a web scraper that can successfully scrape these sites over the long term. With Web Scraping API’s SERP Scraping API, on the other hand, all of these issues (and many more) are taken care of:

- Rotating residential proxies - To ensure a high success rate, only the highest-quality IP addresses are selected for the SERP scraping API.

- Unique fingerprint - We use real and unique browser fingerprints, so that all requests look like they originate from a real browser.

- Custom stealth - A team of experts is constantly working on patching browser properties that may expose automated activity.

Apart from that, when signing up for the SERP API, you sign up for the entire infrastructure and team behind it. You can easily scale your project at any time. Also, if you ever encounter issues or have any questions, when you reach out to support, you will end up talking to one of the engineers working on the actual API. This way, at Web Scraping API, we ensure the lowest response times and the highest level of technical support for our users.

How To Sign Up For Our Free Cloud Based SERP And Google Scraper

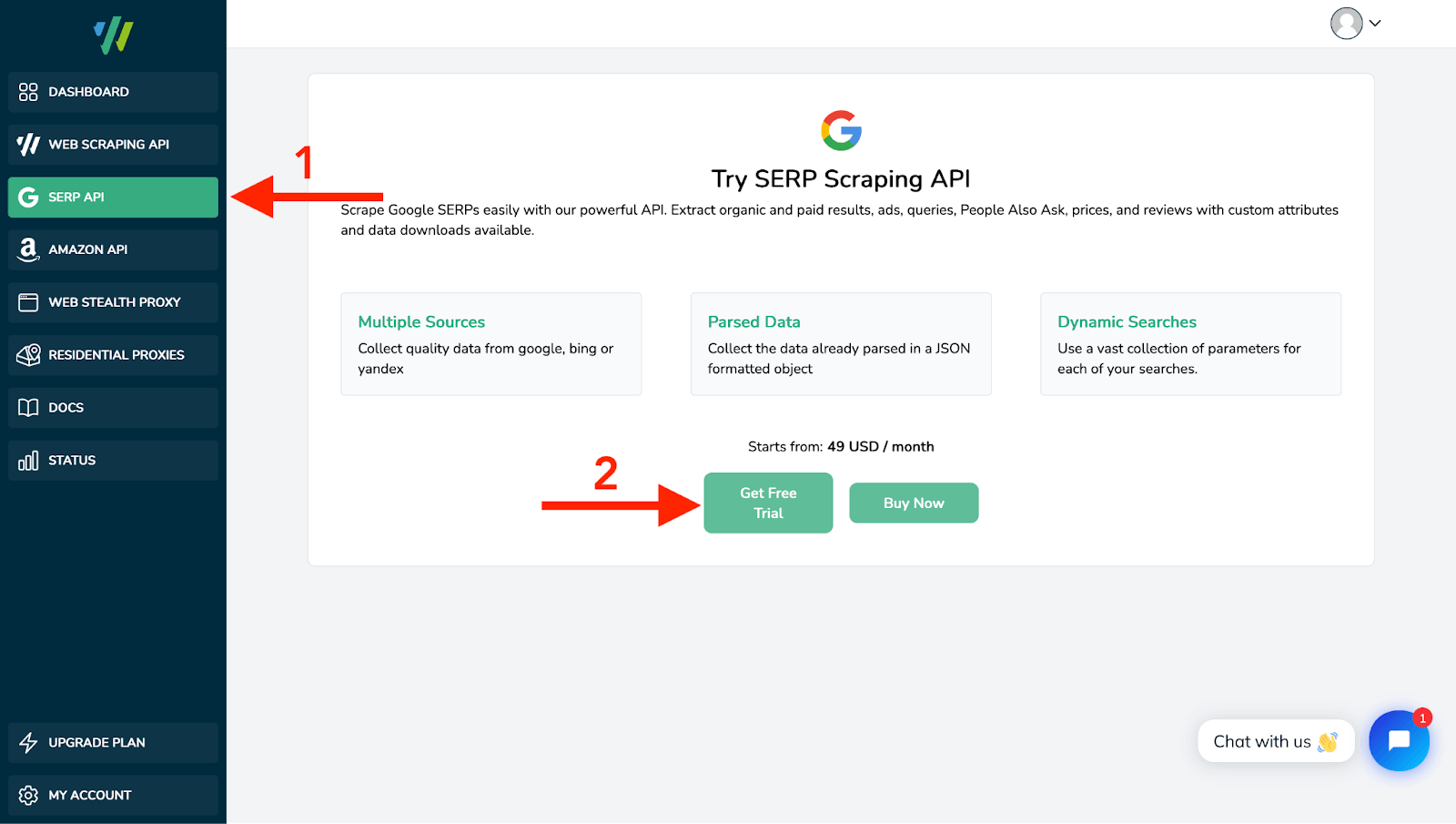

Signing up to our Google scraper is as easy as creating an account. To get started with the SERP Scraper API, visit our Sign Up Page and simply create an account. Once the account is active, you have the option to enable one (or all for that matter) of the scrapers we discussed at the beginning of this article. Again, since our focus is on the SERP Scraper API, to activate a free trial:

- Click on the SERP API button

- Click on the “Get Free Trial” button

You will then get a full access trial for the next 7 days. If you wish to continue using the fully featured Google scraper, you can purchase one of our plans. Otherwise, you will be downgraded to our free tier and still get access to the API.

What Is Included With Full Access Plans vs Free Tier

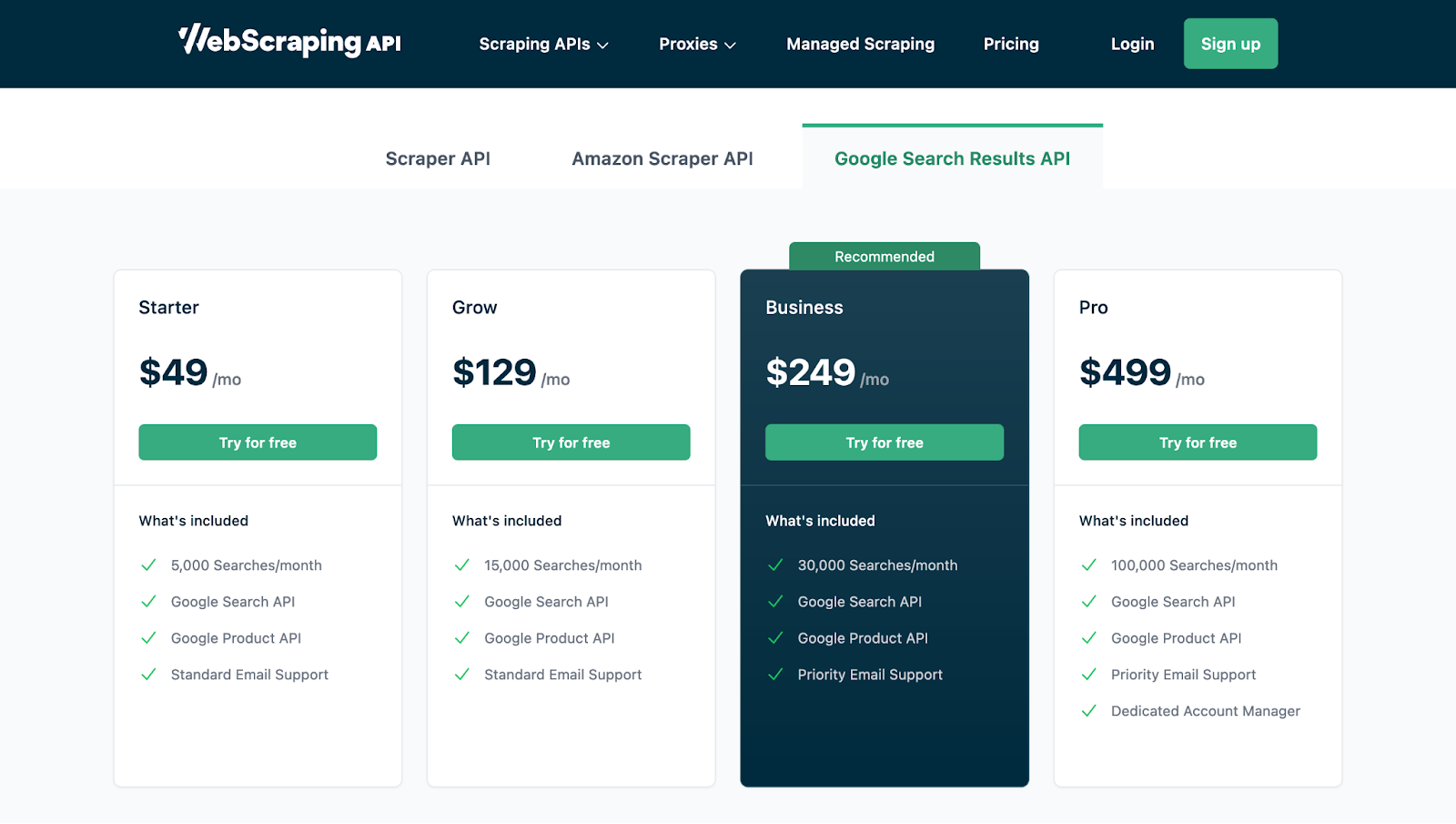

During the 7-day free trial, you get access to all features of our SERP scraping API. To continue with full access, you may choose one of our paid plans.

To get updated pricing information, I recommend you visit our Pricing Page and click on the Google Search Results API tab. As a general rule, all paid plans have similar features included, with minor exceptions. For example, from the Business plan up, you get prioritized email support. Also, the Pro and Enterprise plans get a dedicated account manager.

We offer a Free tier as well, which includes 100 API credits per month, meaning that it will give you full access to the API and you get to access it 100 times per month. Also, if one of your calls is unsuccessful (i.e. it gets blocked by a captcha), the credit for the call won’t get deducted from your account.

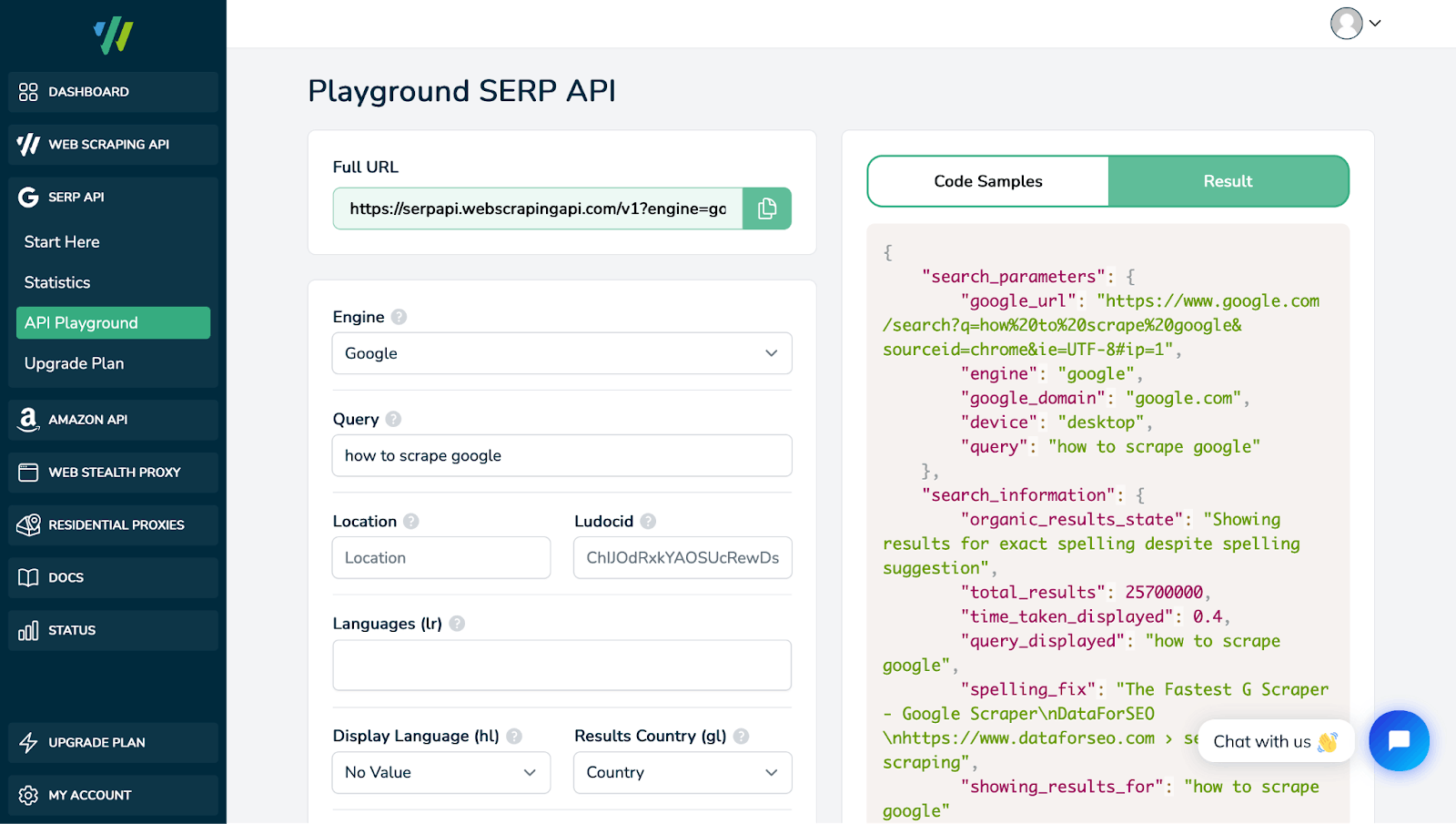

How To Use The SERP Scraper API

Interacting with the SERP Scraper API is quite easy, both for experienced developers and for non-technical users. For developers, we encourage you to check out our extensive documentation. For non-technical users, we’ve built a playground inside the Dashboard, which can be used to customize the Google scraper API and get the response in a JSON format.

If you are a developer, you may want to interact with the SERP scraping API programmatically. Again, you can find plenty of resources in our Documentation, and you can also generate code samples inside the playground. In the next section, we will discuss some of the technical aspects of the API, so that you can get a better understanding of it.

Authenticating API Requests

To authenticate your requests with our API, all you are required to do is to pass the `api_key` query parameter along with the request. This way, we can identify your account and accept the request. The endpoint at which you can access the SERP Scraper API is:

https://serpapi.webscrapingapi.com/v1?api_key=<YOUR_API_KEY>
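As a minimal sketch, the authenticated endpoint can be assembled in Python with the standard library. The helper name `authenticated_url` and the placeholder key `demo-key` are illustrative assumptions, not part of the API; only the base URL and the `api_key` parameter come from this guide.

```python
from urllib.parse import urlencode

# Endpoint taken from this guide.
BASE_URL = "https://serpapi.webscrapingapi.com/v1"


def authenticated_url(api_key: str) -> str:
    """Return the endpoint URL with the api_key query parameter attached.

    Hypothetical helper for illustration; it only builds the URL and
    does not send a request.
    """
    if not api_key:
        raise ValueError("api_key must not be empty")
    # urlencode percent-encodes any characters that are unsafe in a query string.
    return f"{BASE_URL}?{urlencode({'api_key': api_key})}"


if __name__ == "__main__":
    # "demo-key" is a placeholder; substitute the key from your Dashboard.
    print(authenticated_url("demo-key"))
```

Building the query string with `urlencode` rather than string concatenation keeps the URL valid even if a key contains characters that need escaping.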

Available SERP Scraper Engines

Signing up for our SERP Scraper API gives you access to many engines. For example, you can use it to scrape Google Search and other Google pages, or to scrape Bing or even Yandex. A full list of supported engines is available in our Documentation. What I would like to highlight here is that, to activate an engine, you simply need to pass the appropriate value to the `engine` query parameter:

https://serpapi.webscrapingapi.com/v1?api_key=<YOUR_API_KEY>&engine=<ENGINE>

For example, if you want to scrape Google search results, you will have to send your requests to:

https://serpapi.webscrapingapi.com/v1?api_key=<YOUR_API_KEY>&engine=google
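The same URL can be produced from Python, and the response read as JSON. This is a rough sketch under a few assumptions: the function names `engine_url` and `fetch_json` are hypothetical, `demo-key` is a placeholder, and the JSON decoding relies on this guide's statement that the API responds in JSON format. A real search will likely need further parameters, such as the keyword discussed in the next section.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Endpoint taken from this guide.
BASE_URL = "https://serpapi.webscrapingapi.com/v1"


def engine_url(api_key: str, engine: str) -> str:
    """Return the endpoint URL with the api_key and engine parameters set."""
    return f"{BASE_URL}?{urlencode({'api_key': api_key, 'engine': engine})}"


def fetch_json(url: str, timeout: float = 30.0):
    """Send a GET request and decode the body as JSON.

    Performs a real network call; assumes the response body is JSON,
    as described in this guide.
    """
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Only the "google" engine value is confirmed by this guide; look up
    # the values for other engines in the Documentation.
    print(engine_url("demo-key", "google"))
```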

Customizing The Scraping Engine

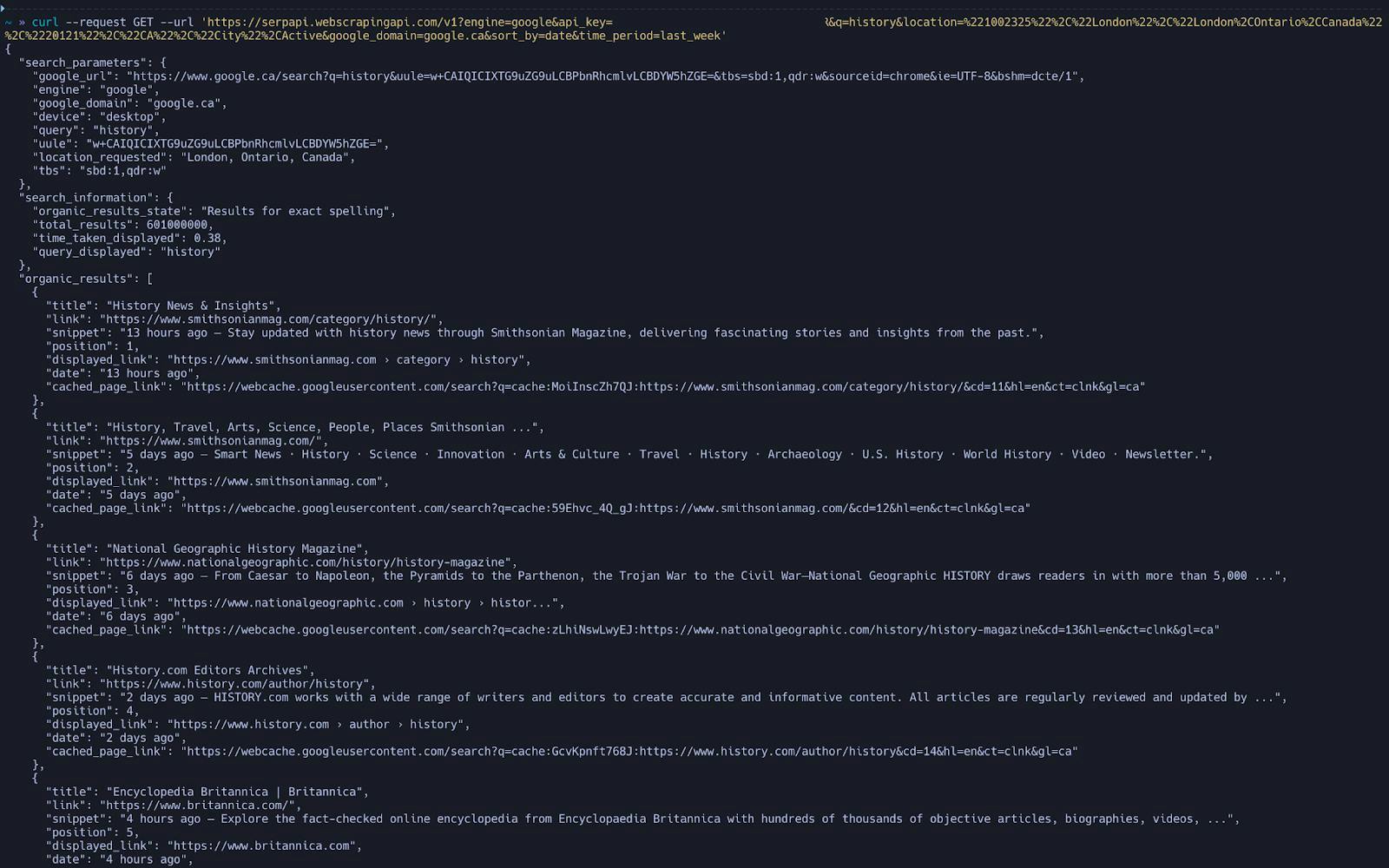

As a general rule, we customize our scrapers using query parameters. However, each engine has unique properties and it would be quite hard to discuss all of them here (especially because they have been included in the Documentation). To help you understand how query parameters are used to customize the SERP Scraper API, we’ll take for example the Google Search Scraper. Let’s say our desired HTTP client is curl and we want to scrape Google search results for:

- Keyword: history

- User Location: London, Ontario, Canada

- Google Domain: google.ca

- Sorted by: date

- Period: last week

Which simply translates to: “scrape all results from Google Canada for the ‘history’ keyword, get results from last week and sort them by date”. Then we would send the following request:

~> curl --request GET --url 'https://serpapi.webscrapingapi.com/v1?engine=google&api_key=<YOUR_API_KEY>&q=history&location=%221002325%22%2C%22London%22%2C%22London%2COntario%2CCanada%22%2C%2220121%22%2C%22CA%22%2C%22City%22%2CActive&google_domain=google.ca&sort_by=date&time_period=last_week'

The parameters (apart from the engine and api_key) used to customize this request are:

- `q=history` - to specify the keyword

- `location=%221002325%22%2C%22London%22%2C%22London%2COntario%2CCanada%22%2C%2220121%22%2C%22CA%22%2C%22City%22%2CActive` - to access results as if the user is located in London, Ontario, Canada

- `google_domain=google.ca` - to specify the Google URL

- `sort_by=date` - to sort results by date

- `time_period=last_week` - to only get results from last week
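To make the percent-encoded `location` value easier to read, the sketch below decodes it and then rebuilds the same request URL as the curl command from a plain dictionary of parameters. The function name `history_query_url` and the placeholder key `demo-key` are assumptions made for illustration; the parameter names and values are the ones listed above.

```python
from urllib.parse import unquote, urlencode

# Endpoint taken from this guide.
BASE_URL = "https://serpapi.webscrapingapi.com/v1"

# The location value exactly as it appears in the curl command.
ENCODED_LOCATION = (
    "%221002325%22%2C%22London%22%2C%22London%2COntario%2CCanada%22"
    "%2C%2220121%22%2C%22CA%22%2C%22City%22%2CActive"
)

# Decoding shows the value is a comma-separated record with quoted fields.
LOCATION = unquote(ENCODED_LOCATION)


def history_query_url(api_key: str) -> str:
    """Rebuild the URL from the curl example (hypothetical helper)."""
    params = {
        "engine": "google",
        "api_key": api_key,
        "q": "history",
        "location": LOCATION,  # urlencode re-applies the percent-encoding
        "google_domain": "google.ca",
        "sort_by": "date",
        "time_period": "last_week",
    }
    return f"{BASE_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(LOCATION)
    print(history_query_url("demo-key"))  # placeholder key
```

Keeping the parameters in a dictionary means the readable location string can be stored as-is, with the encoding left to `urlencode`.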

Conclusions

Having access to real time SERP information is both challenging and important. On one hand, building a reliable SERP scraper from the ground up can take up time and resources. On the other hand, access to scraped information can give you a competitive advantage. With our SERP Scraping API you can get instant access to both.

Moreover, using an established cloud-based scraper is typically more cost-effective than building your own scraper. This is due to various factors, including shared costs and the availability of existing scalable infrastructure.

We hope this guide will help you set up your scraping project. If you have any questions, please check out our Documentation or reach out to our support team! We look forward to helping you succeed!
